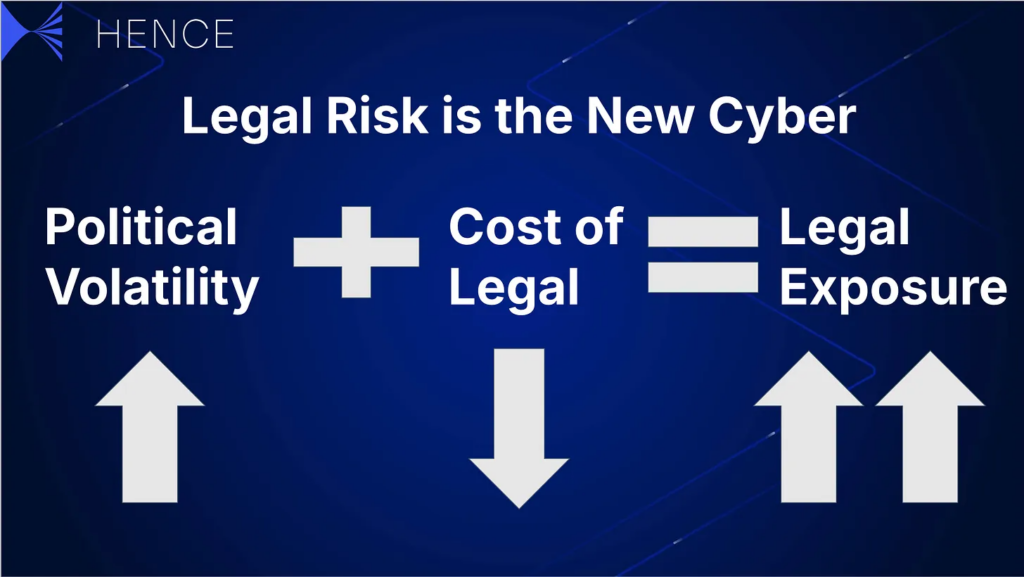

Last week, we put forward in HBR a fairly straightforward argument, but one that hadn’t really been made before: If political volatility is going up and the cost of legal actions is going down (due to AI), then the legal exposure of companies will go way up. This will likely give rise to a flood of legal attacks by everyone against everyone else, because if it costs nothing to try to catch your competitor doing something wrong, you’d probably try. As a result, we expect legal risk to mutate from an occasional big-ticket risk into an ever-present one characterized by high volumes of smaller-ticket activity.

Lawyers love analogical reasoning, so it shouldn’t have been a surprise that likening legal risk to cyber risk elicited some reactions. What was most surprising was that not one public comment on social media rejected our argument; instead, the comments all offered interesting new implications or supporting evidence that bolstered it. For someone who publishes opinions more often than is healthy, this was a first.

Legal Risk is a Board-Level Problem

One question I got was why we published it in a business magazine rather than a legal magazine. The legal industry has phenomenal press outlets that cover big ideas and generate conversations within the sector. The fact that the question was posed underscores how few voices there are attempting to amplify messages out of the legal discourse and into the broader business ecosystem.

Solving for novel risks will require new forms of preparedness and collaboration within corporations – approaches that might need to be blessed by the CEO or other business leaders. When we stepped back and thought about the concept of legal-as-cyber-risk, it occurred to us that this was squarely one of those topics. So we decided to place the piece with the top business journal in order to get it into the hands of CEOs and onto board agendas, as the rest of the C-suite and board will be called upon by legal for support and strategic guidance.

Failing to engage broader audiences on legal risk means suffering from a lack of resources and too narrow a focus. Imagine if the cyber-security discourse only happened in IT journals. The C-suite would be woefully underprepared for a cyber-attack.

Reactions

In effect, the piece argued that cheap legal actions driven by generative AI will trigger a flood of legal complaints directed at businesses. Some will be malicious; others will be completely fair exercises of rights by customers who might not otherwise have done so. Still others may be politically motivated by governments, and some may be done simply for sport. This feels a lot like cyber-attacks, and our piece went in depth on lessons from the cyber industry for countering such threats. I won’t spend more time summarizing; you should just read it here.

But I will highlight a few of the interesting reactions it drew.

AI changes everything

Andrew Cooke, GC at TravelPerk, included our argument about the weaponization of law as one of five basic assumptions about the (near) future state of legal – the others being that all forms of legal deliverable will be available on demand through AI; that consumers won’t tolerate any friction in legal products in the future; that AI will outpace regulators, creating moats for organisations that can navigate regulatory complexity; and that the AI bubble won’t burst.

A rise in adventurous litigation

Ross McNairn, CEO of AI productivity suite Wordsmith, noted that their clients have seen 4-5X increases in “adventurous litigation in the past 12 months,” mostly driven by AI-generated requests, especially in privacy, where there are strict deadlines and strict liability for not responding.

This is a lot like the Treasury Department examples we offered in the HBR article. By law, Treasury had to read the 120,000 rule comments submitted to it, whether generated by AI or not. The same is true of many types of legal action that trigger a legally required response.

Tech will identify targets

Christopher Suarez, IP and Technology Partner at Steptoe, agreed about the risk from the perspective of an IP litigator, noting that “Litigators on both sides of the ‘v’ will need to be ready!” He offered the example of how it will be easier for “plaintiffs to identify prospective targets, for example, in patent litigation, where it might be easier to scour the internet for evidence of use and create infringement charts using an AI in a matter of seconds, as opposed to hiring teams of researchers to manually identify targets and assemble charts.”

Insurance carries much of the risk

Steven Barge-Siever, founder of a stealth AI insurtech broker, noted that “The biggest loser in the logical extreme of this situation will be insurance companies…to combat higher frequency, you will likely see higher deductibles (retentions) by insurance companies.”

It is an interesting coincidence that we’re having this debate just as plaintiff-tech firm EvenUp has become a unicorn on the strength of tech that helps plaintiff firms become more successful. Its goal of helping “20 million injury victims in the U.S. achieve fairer outcomes each year” implies a lot of claims. And while I agree that insurance will bear a lot of the downside in the first instance, and that customers will find themselves carrying more risk than they might like as a result of policy shifts, I do think new insurance products will emerge to combat the legal flood (as occurred with the development of cyber insurance).

The legal ecosystem can’t save you

David Curran, Co-Chair of the Sustainability and ESG Practice at Paul Weiss, noted that “The legal ecosystem is ill prepared for the brave new world that is right on our doorsteps. We’ve found that organizations struggle to ‘predict the present’ (a phrase I attribute to Richard J. Cellini) let alone plan for the future. Byzantine structures, tactics and strategies built to address a slower moving world simply cannot keep pace with the tools and techniques that will be employed.”

David is absolutely right that threats will outpace our current preparedness. To me, this is partly because government will underinvest in the tools to protect companies through public goods like more generalized threat detection. After all, as we noted in the piece, cyber criminals are criminals, but people using offensive legal action are working lawfully through the legal system. It is also because companies often underinvest in risk mitigation until a threat becomes obvious. We are sounding the alarm early; it will be interesting to see who hears it.

Individual empowerment may lead to overwhelmed courts

Michael Okerlund, a long-time GC who is now CEO of CloudCourt, responded that he expects AI to cause litigation and adversarial pre-litigation processes to surge, which would strain judicial resources. As he commented:

“Once the public (and especially pro se plaintiffs) better understand how they can use AI to engage in these processes, they will take advantage of it. Take insurance policies: as the saying goes, ‘the big print giveth, and the small print taketh away’—a tactic many insurance companies use to deny claims. Today, when you receive a claim denial letter, you can use an AI tool to review the dense policy terms you don’t understand, assess your facts, and quickly draft a demand letter or complaint. Historically, the difficulty of doing these things has suppressed certain kinds of litigation. AI is now removing those barriers, particularly in areas like consumer rights, insurance disputes, and small claims. AI is going to empower more people to engage with the legal system. The likely result? My prediction is increased caseloads, leading to longer wait times, and a strain on judicial resources in courts unprepared for the influx. While this could enhance access to justice, it will also present a major challenge for judicial efficiency. That’s my prediction, at least.”

We’ll soon fight AI with AI

So how do you defend yourself? In the article, we offered a number of methods, including pooling of vulnerabilities, linking legal and corporate strategy to examine your footprint, and using AI to monitor the landscape. Shlomo Klapper of LearnedHand, a company building AI tools to help judges, suggested productizing these and other approaches, offering the following list:

1. Automated Legal Defender: An AI tool to filter low-value, high-volume threats—frivolous claims, bogus violations—freeing up your legal team to focus on real battles. It’s not ruthless, just smart.

2. Vulnerability Detection and Triage: A system that scans your legal landscape, spotting risks before they hit. Prioritize where to allocate resources before a crisis occurs.

3. AI-Powered Litigation Forecast: AI that predicts your chances in court, turning legal strategy from a gamble into a calculated decision, based on trends, case law, and judges’ history.

Until then we’ll fight it with humans

Until those products are built (and Hence is building some of them, particularly around risk monitoring), smart lawyers will be the first port of call in the legal storm. As Basha Rubin, CEO and Co-Founder at Priori Legal, noted, “This is exactly why the proliferation of AI is going to increase the demand for lawyers. By lowering the barrier to entry, there will be more lawsuits, more comments on regulation, etc. It won’t be the death of lawyers; in fact, we will need more lawyers than ever before.”

Until the high-tech defense solutions are built and adopted – and for many, even after that – this will no doubt be true.

That’s it for this week.

By Sean West & Steve Heitcamp
